Taming the Chaos: Your Guide to Data Normalisation

Introduction

Have you ever felt like you were drowning in a sea of data, where every byte seemed to play a game of hide and seek? In the digital world, where data reigns supreme, it’s not uncommon to find oneself navigating through a labyrinth of disorganised, redundant, and inconsistent information. But fear not, brave data navigators! There exists a beacon of order in this chaos: data normalisation.

Data normalisation isn’t just a set of rules to follow; it’s the art of bringing structure and clarity to your data universe. It’s about transforming a jumbled jigsaw puzzle into a masterpiece of organisation, where every piece fits perfectly. Let’s embark on a journey to demystify this hero of the database world and discover how it can turn your data nightmares into a dream of efficiency and accuracy.

Data Normalisation

Data normalisation in database design is the process of organising data to minimise redundancy and avoid update anomalies. It involves dividing large tables into smaller, more manageable ones and defining relationships between them. This approach is guided by a series of normalisation rules known as normal forms (1NF, 2NF, 3NF, BCNF, etc.).

Benefits:

- Reduces Redundancy: Splitting data into related tables means each fact is stored once rather than duplicated across rows.

- Improves Data Integrity: Ensures consistency in data storage, enhancing data accuracy and reliability.

- Easier to Update: Because each fact lives in one place, a change is made once, which reduces the risk of update errors.

- Efficient Use of Space: Removing duplicate data saves storage space.
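To make the "easier to update" benefit concrete, here is a minimal sketch using Python’s built-in sqlite3 module. The table and column names (orders_flat, customers, orders) and the data are hypothetical, chosen only for illustration: in the flat table a customer’s city is repeated on every order, so changing it touches several rows, whereas the normalised design stores it once.

```python
import sqlite3

def count_updates() -> tuple[int, int]:
    """Return (rows touched in the flat design, rows touched in the normalised design)."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()

    # Flat design (hypothetical): the customer's city is repeated on every order row.
    cur.execute("CREATE TABLE orders_flat (order_id INTEGER PRIMARY KEY, customer TEXT, city TEXT)")
    cur.executemany(
        "INSERT INTO orders_flat VALUES (?, ?, ?)",
        [(1, "Acme", "Leeds"), (2, "Acme", "Leeds"), (3, "Acme", "Leeds")],
    )

    # Normalised design (hypothetical): the city is stored once; orders reference the customer by key.
    cur.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
    cur.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, "
        "customer_id INTEGER REFERENCES customers(customer_id))"
    )
    cur.execute("INSERT INTO customers VALUES (1, 'Acme', 'Leeds')")
    cur.executemany("INSERT INTO orders VALUES (?, 1)", [(1,), (2,), (3,)])

    # The customer moves: count how many rows each design has to change.
    flat = cur.execute("UPDATE orders_flat SET city = 'York' WHERE customer = 'Acme'").rowcount
    normalised = cur.execute("UPDATE customers SET city = 'York' WHERE customer_id = 1").rowcount
    conn.close()
    return flat, normalised

print(count_updates())  # (3, 1)
```

Every extra copy in the flat table is another row that could be missed, which is exactly the inconsistency normalisation is designed to prevent.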

Use Cases:

- Complex Transactional Systems: Crucial for systems where data integrity and accuracy are paramount, like financial, inventory management, and CRM systems.

- Frequent Write Operations: Essential where data is inserted and updated often and maintaining data consistency is critical.

- Relational Database Management Systems (RDBMS): Particularly effective for structured data in tables and rows.

Unnormalised Data

Unnormalised (or deliberately denormalised) data is a design approach where data is stored without strict adherence to normalisation rules, often in a single table or a few wide, denormalised tables.

Benefits:

- Faster Query Performance: Minimises the need for complex joins, boosting read performance.

- Simplified Data Retrieval: Makes data more accessible, streamlining certain types of queries.

- Optimal for Read-Heavy Operations: Ideal when the data structure is stable and infrequently modified.

Use Cases:

- Data Warehouses and Data Lakes: Optimised for fast read access, which analytical queries depend on.

- Reporting and Analysis: Unnormalised structures are often more efficient for aggregating data in reports.

- NoSQL Databases: Such as document stores or key-value stores, where scalability and high read performance are key.
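Denormalising for reads usually means paying the join cost once, at load time, instead of on every query. A minimal sketch with sqlite3, assuming hypothetical products and departments tables: a CREATE TABLE ... AS SELECT materialises the join into one wide table that reports can query without any join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Normalised source tables (hypothetical names and data).
cur.execute("CREATE TABLE departments (dept_id INTEGER PRIMARY KEY, dept_name TEXT)")
cur.execute("CREATE TABLE products (product_id INTEGER PRIMARY KEY, description TEXT, dept_id INTEGER)")
cur.executemany("INSERT INTO departments VALUES (?, ?)", [(1, "Education"), (2, "Strategy")])
cur.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "Widget", 1), (2, "Gadget", 1), (3, "Gizmo", 2)],
)

# Denormalise once at load time: the join result is stored as a wide reporting table.
cur.execute(
    """
    CREATE TABLE product_report AS
    SELECT p.product_id, p.description, d.dept_name
    FROM products AS p
    JOIN departments AS d ON d.dept_id = p.dept_id
    """
)

# Report queries now read a single table, with no join at query time.
rows = cur.execute(
    "SELECT dept_name, COUNT(*) FROM product_report GROUP BY dept_name ORDER BY dept_name"
).fetchall()
print(rows)  # [('Education', 2), ('Strategy', 1)]
```

The trade-off is that product_report goes stale if a department is renamed, so it has to be rebuilt or refreshed as part of the load.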

As a Data Engineer, you’re likely to encounter both normalised and unnormalised data structures. In data warehousing scenarios with a star schema, denormalisation might be adopted for efficient querying. Conversely, designing transactional systems or operational databases might call for normalisation to ensure data integrity and avoid anomalies.

Your choice between normalised and unnormalised data hinges on various factors, including the system’s specific requirements, the nature of the data, performance considerations, and the tools and technologies at your disposal.

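Below we dive into normalisation in more depth, covering the first three normal forms. These are layered rules, each building on the one before: a table in 3NF is also in 2NF and 1NF. Further forms such as BCNF, 4NF and 5NF build on these, but for simplicity we will start with the original three. The worked examples use Ralph Kimball’s slowly changing dimension (SCD) Types 1, 2 and 3, which describe how a dimension table handles attribute values that change over time. Despite the similar numbering, SCD types are a separate concept from the normal forms.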

Normalisation Demystified - Deep Dive

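1NF (First Normal Form) and SCD Type 1: Overwrite the Value

A table is in 1NF when every column holds a single, atomic value and there are no repeating groups (for example, no comma-separated lists packed into one column).

In an SCD Type 1 load, we overwrite the old attribute value in the dimension row, replacing it with the current value, so the table always reflects the most recent assignment.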

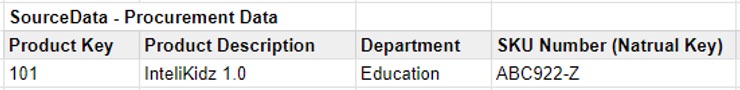

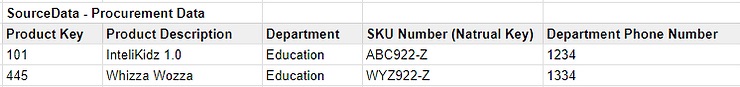

We will use the data below in all of the following examples.

On Monday, the data we load looks like this:

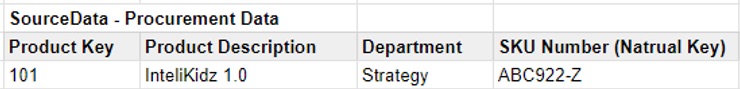

On Tuesday, the data we load looks like this:

When we load Tuesday’s data, the Department field is overwritten, so from Tuesday onwards we only see that Product 101 belongs to the Strategy department. Its previous department is no longer recorded anywhere.
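A Type 1 load is essentially an upsert keyed on the natural key. The sketch below assumes a hypothetical dim_product table (product_id as the natural key, plus description and department) and uses SQLite’s INSERT ... ON CONFLICT DO UPDATE, available in SQLite 3.24 and later. Monday’s department value is made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
# Hypothetical dimension table: product_id is the natural key.
cur.execute(
    "CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, description TEXT, department TEXT)"
)

def load_type1(rows):
    """SCD Type 1: insert new products and overwrite the attributes of existing ones."""
    cur.executemany(
        """
        INSERT INTO dim_product (product_id, description, department)
        VALUES (?, ?, ?)
        ON CONFLICT(product_id) DO UPDATE SET
            description = excluded.description,
            department = excluded.department
        """,
        rows,
    )
    conn.commit()

load_type1([(101, "Product 101", "Education")])  # Monday (illustrative value)
load_type1([(101, "Product 101", "Strategy")])   # Tuesday: the department changed

print(cur.execute("SELECT * FROM dim_product").fetchall())
# [(101, 'Product 101', 'Strategy')]  (one row; Monday's value is gone)
```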

- Pros: Simple to implement, no additional rows

- Cons: Destroys historical data

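2NF (Second Normal Form) and SCD Type 2: Add a History Row

A table is in 2NF when it is in 1NF and every non-key column depends on the whole primary key, not on just part of a composite key.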

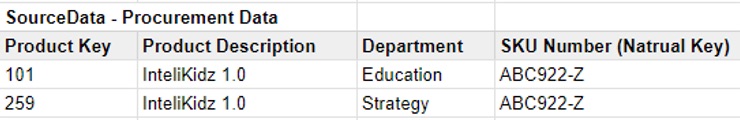

With SCD Type 2, whenever a change is detected in the data payload (all the source columns we track changes on, excluding the natural key), we add a new row rather than overwriting the existing one.

In this example, a change is detected in the Department field, so we add a new Product row with a new surrogate key. In general, a new row is added whenever the natural key stays the same but either the Product Description or the Department has changed.
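A minimal SCD Type 2 sketch in plain Python, so the logic is visible without SQL. The column names (surrogate_key, valid_from, valid_to, is_current), the tracked columns and the dates are common conventions and illustrative values assumed here, not taken from the tables above: when the tracked payload differs from the current row, that row is closed and a new row with a fresh surrogate key is appended.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

TRACKED = ("description", "department")  # payload columns we detect changes on (assumed)

@dataclass
class DimRow:
    surrogate_key: int
    product_id: int                  # natural key
    description: str
    department: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "still current"
    is_current: bool = True

def load_type2(dim: list[DimRow], source: dict, load_date: date) -> None:
    """Append a history row when any tracked column differs from the current row."""
    current = next(
        (r for r in dim if r.product_id == source["product_id"] and r.is_current), None
    )
    if current is not None:
        if all(getattr(current, c) == source[c] for c in TRACKED):
            return  # payload unchanged: nothing to do
        current.valid_to = load_date  # close the old version
        current.is_current = False
    dim.append(
        DimRow(
            surrogate_key=len(dim) + 1,  # simple key generator, adequate for this sketch
            product_id=source["product_id"],
            description=source["description"],
            department=source["department"],
            valid_from=load_date,
        )
    )

dim: list[DimRow] = []
load_type2(dim, {"product_id": 101, "description": "Product 101", "department": "Education"}, date(2024, 1, 1))
load_type2(dim, {"product_id": 101, "description": "Product 101", "department": "Strategy"}, date(2024, 1, 2))
for row in dim:
    print(row.surrogate_key, row.department, row.valid_from, row.valid_to, row.is_current)
# 1 Education 2024-01-01 2024-01-02 False
# 2 Strategy 2024-01-02 None True
```

Queries that want "as it was on a given date" filter on valid_from and valid_to, while queries that want the latest view filter on is_current, which is where the extra query complexity comes from.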

- Pros: Preserves full history, allows for time-based analysis

- Cons: Increases data volume and requires more complex queries

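3NF (Third Normal Form) and SCD Type 3: Add a Column

A table is in 3NF when it is in 2NF and no non-key column depends on another non-key column (no transitive dependencies), so every non-key attribute depends only on the key.

SCD Type 3 is used when there is a strong need to support two views of the world simultaneously. We add a column that holds the previous value alongside the current one, so although the change has occurred, we can still report as if it hadn’t.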
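A minimal SCD Type 3 sketch in plain Python, assuming a hypothetical pair of columns, current_department and previous_department; the names and department values are illustrative. One row per product is kept, and only one prior value survives, so a second change overwrites it.

```python
def load_type3(dim: dict[int, dict], product_id: int, department: str) -> None:
    """SCD Type 3: shift the current value into previous_department, then overwrite it."""
    row = dim.get(product_id)
    if row is None:
        # First time we see this product: there is no prior value yet.
        dim[product_id] = {"current_department": department, "previous_department": None}
    elif row["current_department"] != department:
        row["previous_department"] = row["current_department"]
        row["current_department"] = department

dim: dict[int, dict] = {}
load_type3(dim, 101, "Education")  # Monday (illustrative value)
load_type3(dim, 101, "Strategy")   # Tuesday
print(dim[101])
# {'current_department': 'Strategy', 'previous_department': 'Education'}
```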

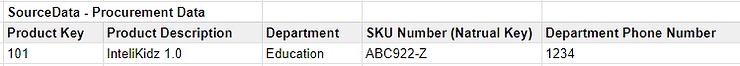

On Monday, the data we load looks like this:

On Tuesday, the data we load looks like this:

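Now a potential redundancy arises: both products belong to the “Education” department, and the department’s phone number is stored on each product row. This is a transitive dependency, because the phone number depends on the department rather than on the product. If the Education department’s phone number changes, we’d need to update it in multiple rows, increasing the risk of inconsistencies.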

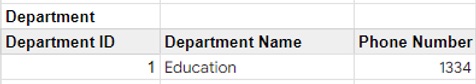

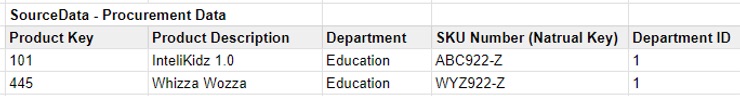

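The 3NF solution is to create a separate “Department” table that stores each department’s details, such as its phone number, once, and to have the Product table reference it by department.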
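A minimal sketch of that split with sqlite3. The table and column names (department, product, department_name, phone), the second product and the phone numbers are hypothetical: after the split, the Education phone number is stored once, so changing it is a single-row update, and a join recovers the original wide view.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
cur = conn.cursor()

# 3NF: phone depends on the department, so it lives in the department table.
cur.execute("CREATE TABLE department (department_name TEXT PRIMARY KEY, phone TEXT)")
cur.execute(
    """
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,
        description TEXT,
        department_name TEXT REFERENCES department(department_name)
    )
    """
)
cur.execute("INSERT INTO department VALUES ('Education', '555-0100')")
cur.executemany(
    "INSERT INTO product VALUES (?, ?, 'Education')",
    [(101, "Product 101"), (102, "Product 102")],  # hypothetical products
)

# The phone number changes: exactly one row is updated.
updated = cur.execute(
    "UPDATE department SET phone = '555-0199' WHERE department_name = 'Education'"
).rowcount

# Join to see each product with its department's single, consistent phone number.
rows = cur.execute(
    """
    SELECT p.product_id, d.department_name, d.phone
    FROM product AS p
    JOIN department AS d ON d.department_name = p.department_name
    ORDER BY p.product_id
    """
).fetchall()
print(updated, rows)
# 1 [(101, 'Education', '555-0199'), (102, 'Education', '555-0199')]
```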

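- Pros: Each department’s phone number is stored once, which removes the redundancy and improves data consistency.

- Cons: Queries need an extra join to bring department details back alongside products. Separately, the Type 3 approach can lead to a larger number of attributes over time, as every tracked attribute needs its own “previous value” column, making the table wider.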

In the ever-evolving landscape of data management, normalisation is not just a process but a journey towards achieving clarity, efficiency, and integrity in your databases. From the foundational principles of the First Normal Form to the advanced intricacies of the Fifth, each step in this journey helps to transform your data from a chaotic clutter into a streamlined, powerful tool.

Remember, the quest for perfect data organisation is ongoing. It requires continuous evaluation, adaptation, and a willingness to embrace new methodologies. As Ralph Kimball and other data experts have shown, the path to data nirvana is not linear but a continuous cycle of improvement and refinement.

Let your databases not just store information, but tell a story — a story of accurate, reliable, and actionable insights. With each normal form applied, your data becomes more than just numbers and text; it becomes a well-orchestrated symphony, ready to empower your decision-making and drive your success.

So, embark on this journey with curiosity and enthusiasm. The future of data is bright, and with each step in normalisation, you’re unlocking its true potential, one table at a time.

Further Reading